Agent Skills: Teaching Your AI How to Actually Work

From babysitting commands to fire-and-forget confidence: how Agent Skills transformed my git workflow and why they matter for AI-assisted development.

Two months into AI-assisted development as a senior consultant - the key insights, best practices, and mindset shifts that transformed how I write code.

Two months ago, I fundamentally changed how I write code. As a senior software consultant, I’ve shifted from manually crafting every line to what’s becoming known as “agent coding” - having conversations with AI about what I want to build rather than implementing it myself.

Here are the key insights and best practices I’ve developed over the past two months.

Quick Win: Don’t judge agent coding based on weak models. Having seen internally hosted open-source models struggle with basic tasks, I understand why some people aren’t enthusiastic about AI-assisted development.

The Reality: Claude 4 takes it to another level entirely. Gemini 2.5 Pro is also a strong contender - especially when you’re no longer paying Cursor enough for Claude 4 access and get put into slow mode. The difference in code quality, architectural understanding, and constraint-following between top-tier and weaker models is night and day.

Claude’s Edge: Claude seems more “eager” to perform work than other models. Where other models tend to overlook associated files, code, or config changes, Claude actively looks for related components that need updating. This proactive approach often saves you from having to explicitly mention every file that needs changes.

The biggest change isn’t in tools - it’s mental. I’ve moved from thinking about low-level implementation details to focusing on what I want to accomplish.

Key Insight: The how doesn’t disappear - I’m now thinking about how at a much higher level: system design, data flow, and overall approach rather than syntax, API calls, and boilerplate code.

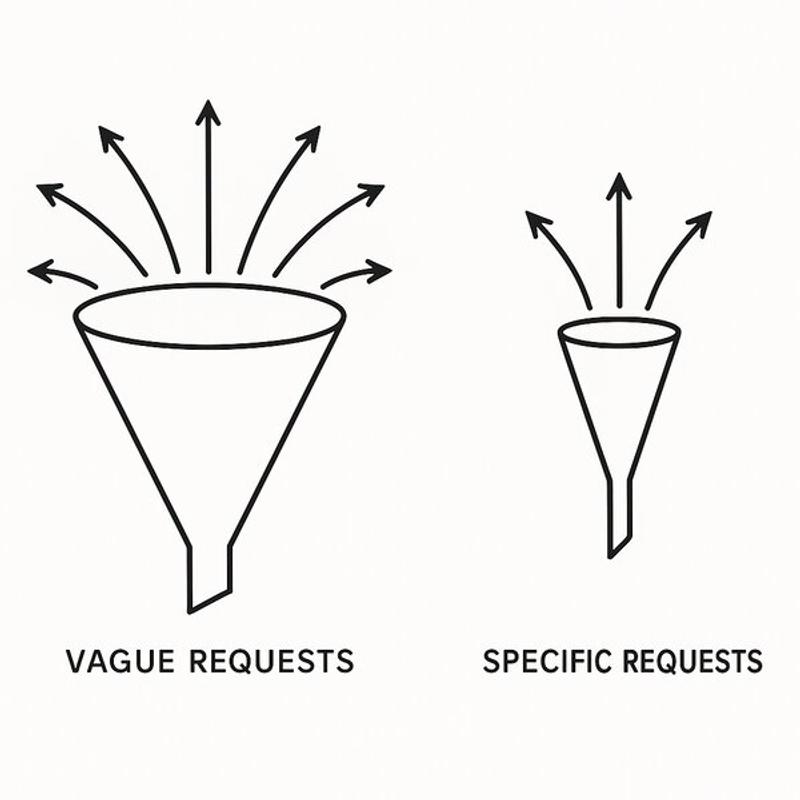

Mental Model: Think of each task as a cone. The broader your request, the wider the top becomes, and the more potential outcomes exist. This is why vague prompts like “build a user system” often produce unsatisfactory results.

Your Role: Narrowing that cone by providing constraints, context, and specificity to guide the AI toward the exact outcome you need.

Too Broad (Wide Cone):

Properly Constrained (Narrow Cone):

The Magic: Each constraint you add eliminates thousands of possible implementations the AI might consider. You’re not limiting creativity - you’re channeling it toward your specific needs.
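To put rough numbers on the cone, here is a minimal sketch that counts how many combinations remain as constraints are added. The design dimensions and option lists for the “user system” request are made up for illustration; a real design space is far larger and not this tidy.

```typescript
// Hypothetical design dimensions for a vague "build a user system" request.
// The option lists are illustrative assumptions, not an exhaustive catalogue.
type DesignSpace = Record<string, string[]>;

const userSystem: DesignSpace = {
  auth: ["session", "jwt", "oauth", "magic-link"],
  storage: ["postgres", "mongodb", "sqlite", "in-memory"],
  api: ["rest", "graphql", "rpc"],
  validation: ["schema-library", "decorators", "hand-written"],
};

// A constraint pins one dimension to a single choice.
type Pinned = Record<string, string>;

// Counts the combinations that are still possible under the given constraints.
function countOutcomes(space: DesignSpace, pinned: Pinned = {}): number {
  let total = 1;
  for (const dimension of Object.keys(space)) {
    const choice = pinned[dimension];
    if (choice === undefined) {
      // Unconstrained dimension: every option is still on the table.
      total *= space[dimension].length;
    } else if (space[dimension].indexOf(choice) === -1) {
      // The constraint asks for something outside the space: nothing matches.
      return 0;
    }
    // A valid pinned dimension contributes a factor of 1.
  }
  return total;
}

const wideCone = countOutcomes(userSystem); // 4 * 4 * 3 * 3 = 144
const narrowerCone = countOutcomes(userSystem, { auth: "jwt" }); // 1 * 4 * 3 * 3 = 36
const narrowCone = countOutcomes(userSystem, {
  auth: "jwt",
  storage: "postgres",
  api: "rest",
}); // 1 * 1 * 1 * 3 = 3

console.log(wideCone, narrowerCone, narrowCone);
```

Three constraints take this toy space from 144 possible outcomes down to 3. With more dimensions, the same multiplication is how a handful of constraints can rule out thousands of implementations.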

Start with your intent, then add constraints iteratively:
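One way to picture that iteration is as a small sketch: the intent stays fixed while constraints accumulate from one message to the next. The helper names (`startWith`, `addConstraint`, `renderDraft`) and the password-reset task are hypothetical, chosen only to illustrate the idea.

```typescript
// A prompt draft is an intent plus the constraints added so far.
interface PromptDraft {
  intent: string;
  constraints: string[];
}

// Begin with the bare intent: the widest version of the cone.
function startWith(intent: string): PromptDraft {
  return { intent: intent, constraints: [] };
}

// Returns a new draft with one more constraint; the original draft is untouched.
function addConstraint(draft: PromptDraft, constraint: string): PromptDraft {
  return { intent: draft.intent, constraints: draft.constraints.concat(constraint) };
}

// Renders the draft as the text you would actually send.
function renderDraft(draft: PromptDraft): string {
  if (draft.constraints.length === 0) {
    return draft.intent;
  }
  const bullets = draft.constraints.map((c) => "- " + c).join("\n");
  return draft.intent + "\nConstraints:\n" + bullets;
}

// Hypothetical task: each step narrows the request a little further.
let resetDraft = startWith("Add a password reset flow.");
resetDraft = addConstraint(resetDraft, "Reuse the existing email service; add no new dependency.");
resetDraft = addConstraint(resetDraft, "Reset tokens expire after 30 minutes.");

const renderedReset = renderDraft(resetDraft);
console.log(renderedReset);
```

In practice I do this in conversation rather than in code, but the shape is the same: state the intent once, then keep appending constraints until the output matches what you had in mind.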

Credit: The framing of the AI as a junior developer comes from a colleague, and it captures the relationship well.

Practical Application: Just as you’d establish coding standards for a new team member, create rule files for AI. The difference? This “junior developer” implements standards incredibly quickly once they understand them.

Pro Tip: Let the AI write your rule files! Don’t try to make them perfect immediately. You can root out the LLM’s “flaws” iteratively; that is what I do, and it works well.

Example: For Angular projects, I specify “use inject() instead of the old @Inject syntax” in my rule files.

Biggest Early Mistake: Making tasks too large.

Bad: “Build backend changes and matching frontend changes for a new endpoint”

Good: “Build the backend endpoint first, then tackle frontend integration as a separate conversation”

Rule: Smaller, focused conversations consistently outperform trying to accomplish everything at once.

Don’t just state what you want - share your architectural thinking.

Template: “I want to [specific goal]. My approach is [high-level strategy]. Let’s start with [first step].”
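The template is simple enough to sketch as a function. The `TaskFrame` type and `framePrompt` helper are hypothetical names, and the filled-in values are only an illustration built around the Vitest migration mentioned elsewhere in this post.

```typescript
// The three slots of the template: goal, high-level strategy, first step.
interface TaskFrame {
  goal: string;
  approach: string;
  firstStep: string;
}

// Fills the template so the prompt always carries the architectural thinking.
function framePrompt(frame: TaskFrame): string {
  return `I want to ${frame.goal}. My approach is ${frame.approach}. Let's start with ${frame.firstStep}.`;
}

// Hypothetical example values.
const vitestPrompt = framePrompt({
  goal: "migrate the unit tests to Vitest",
  approach: "to follow the official migration guide one package at a time",
  firstStep: "the shared utilities package",
});

console.log(vitestPrompt);
```

The point is not the string formatting but the forcing function: if you cannot fill in the approach slot, the task is probably not ready to hand over yet.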

Quick Wins:

Key: Simple request + good context > complex prompt without context

Search vs. Direct Links: Sometimes I tell the AI to search explicitly - this can be more helpful than pasting random links. But for something like “migrate to Vitest,” I know I want the official guide, so no need to search.

Rule of Thumb: Let AI search for general best practices, provide direct links for specific official documentation.

The Reality: When AI makes an error, it’s a bit of a coin toss whether it’s smarter to “revert” the last step or keep working forward and have it fix the issue.

My Default: Most of the time I use the latter - share the error message and let AI fix it forward.

When to Revert: Sometimes reverting can be smarter, especially when:

Process: Simply rerun with the error message for forward fixes. For reverts, explicitly ask to go back to the previous working state.

Game Changer: Depending on what you’re doing, multiple tabs allow you to work on two things simultaneously.

Critical Rule: They shouldn’t operate on the same files. Cursor doesn’t like that and you’ll run into conflicts.
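Before starting a second tab, it helps to check that the two tasks really are disjoint. A minimal sketch of that check, assuming you can list the files each task is likely to touch (the `overlappingFiles` helper and the file paths are hypothetical):

```typescript
// Returns the files that two parallel tasks would both touch.
function overlappingFiles(taskA: string[], taskB: string[]): string[] {
  return taskB.filter((file) => taskA.indexOf(file) !== -1);
}

// Hypothetical file lists for two conversations running side by side.
const backendTask = ["src/api/user.controller.ts", "src/api/user.service.ts"];
const docsTask = ["docs/README.md", "docs/setup.md"];
const refactorTask = ["src/api/user.service.ts", "src/shared/logger.ts"];

const safePair = overlappingFiles(backendTask, docsTask); // no shared files
const riskyPair = overlappingFiles(backendTask, refactorTask); // shares user.service.ts

console.log(safePair.length === 0 ? "safe to run in parallel" : "conflict", riskyPair);
```

An empty result means the two tabs can run side by side; anything else is a sign to run the tasks one after the other.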

Use Cases:

Hidden Gem: Don’t overlook Cursor’s tab autocompletion - it’s much smarter than what I’m used to from Continue in IntelliJ.

What Makes It Special: It actually detects your intention and then intelligently jumps to the next related line in the current open file. This creates a smooth flow where you’re not just getting single-line completions, but contextual suggestions that understand the broader changes you’re making.

Workflow Integration: Use this alongside chat conversations - let the chat handle the big architectural decisions and file creation, then use inline suggestions to efficiently fill in the details and related changes.

What I Haven’t Tried: Creating implementation plans ahead of time with the LLM.

The Idea: Instead of having the entire idea in your head (or making it up as you go), first have a conversation about the implementation plan itself, then move to execution.

Tools: RooCode has “architect” mode for this. With Cursor, I’d describe the planning phase in regular mode, then execute.

Potential Benefit: AI might surface insights or considerations you hadn’t thought of during planning.

as any or disabling ESLint rules

Feel: More like collaborative problem-solving than traditional programming. I’m the architect and constraint-setter; AI is my implementation partner.

Agent coding isn’t about eliminating developers or making us obsolete - it’s a powerful new tool that elevates how we work. Instead of eliminating the “how”, it lets us focus on the higher-level “how” while AI handles detailed implementation within the constraints we define.

We’re still the architects, the decision-makers, and the quality gatekeepers. We’ve just gained an incredibly capable implementation partner.

What’s your experience with AI-assisted coding? I’d love to hear about your own insights and best practices in the comments or through my contact form.

Explore more articles on similar topics

From babysitting commands to fire-and-forget confidence: how Agent Skills transformed my git workflow and why they matter for AI-assisted development.

I spent a few evenings testing Cursor Agents to see if AI-powered development on isolated VMs could actually let you code on the go. Here's what I learned about the promises and limitations of autonomous coding agents.

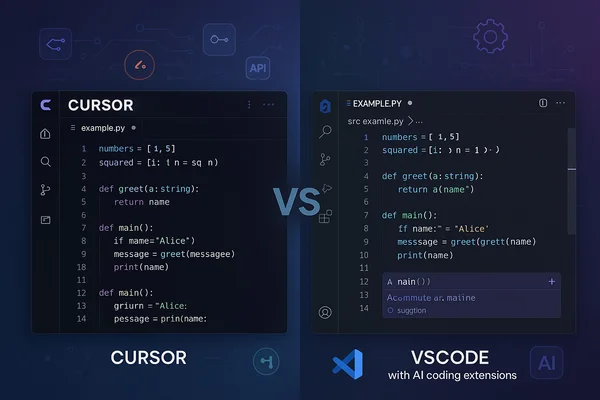

After months of testing Cursor against free VSCode extensions like RooCode and KiloCode, here's an honest breakdown of what actually matters in day-to-day AI-assisted development.