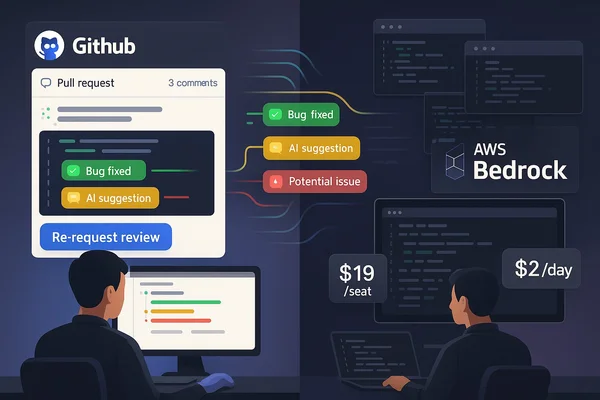

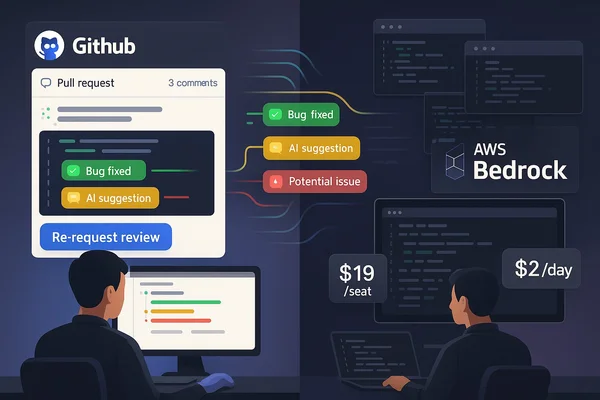


Announcing aicommits 1.0.0, a milestone release bringing flexible AI provider support, AWS Bedrock integration, and lessons learned from building AI tools with AI.

Today, I’m thrilled to announce that aicommits has reached version 1.0.0. This isn’t just another version bump—it’s a milestone that represents six months of development, countless learning moments, and a journey that fundamentally changed how I think about building software.

You can read the full release notes on GitHub.

There’s something delightfully recursive about building an AI-powered commit message tool while using AI to help you build it. When I started working on aicommits again six months ago, I didn’t anticipate how deeply it would pull me into the world of AI-assisted coding. What began as a tool to solve my own workflow friction became something much more significant: a hands-on education in modern development practices that I couldn’t have gotten any other way.

The experience has been transformative—both technically and professionally. This project became my laboratory, and reaching v1.0.0 feels like graduating from that intense, self-directed program.

Before diving into what’s new, I want to share why this project exists at all. I initially forked aicommits in August 2024 out of genuine frustration with the existing landscape of commit message tools. Every tool I tried had a deal-breaker:

Some offered great configurability but only supported one or two AI providers. Others supported multiple providers but locked you into their defaults—you couldn’t change the base URL, customize model parameters, or adapt the tool to your specific workflow.

I wanted both: the flexibility to use any AI provider I chose and the configurability to make the tool work exactly how I needed it to. That combination didn’t exist, so I built it.

The fork sat relatively dormant for a while, but with the advent of Cursor and AI-assisted development tools, this project really took off. What started as a side project became a genuine passion, and the last six months of active development have been transformative.

The biggest internal change is the migration to Vercel AI SDK v5. Before, adding support for a new AI provider meant: installing that provider’s package, writing an abstraction layer to bridge their API to my internal structure, and then maintaining that abstraction layer over time. Every new provider meant more code I owned, more edge cases to handle, more potential breaking changes when providers updated their SDKs.

All of that is gone now. The maintenance burden is Vercel’s business, and I for one am happy about it.

The migration replaced all custom AI provider implementations with AI SDK wrappers, now using generateText and streamText from the ‘ai’ package. For a project like aicommits—especially one born from frustration with limited provider support—this foundation is now scalable in ways it wasn’t before, and I’m no longer the bottleneck for adding new providers.
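To make the benefit concrete, here is a minimal TypeScript sketch of what a provider-agnostic call site looks like. It is not the aicommits source and it does not use the real `ai` package: `TextModel`, `ProviderFactory`, `stubProvider`, and `generateCommitMessage` are hypothetical names, and the stub returns a canned reply instead of calling any API. The point is only the shape: once every provider sits behind one interface, the code that builds the prompt no longer cares which provider is in use.

```typescript
// Hypothetical stand-in for a provider-agnostic model handle.
// These types are illustrative and are NOT the real types from the 'ai' package.
interface TextModel {
    readonly id: string;
    generate(prompt: string): Promise<string>;
}

type ProviderName = 'openai' | 'anthropic' | 'bedrock' | 'ollama';
type ProviderFactory = (modelName: string) => TextModel;

// Stub factory for illustration: returns a canned reply instead of calling an API.
function stubProvider(provider: ProviderName): ProviderFactory {
    return (modelName: string): TextModel => ({
        id: `${provider}:${modelName}`,
        generate: async (prompt: string): Promise<string> =>
            `chore: summarize ${prompt.length} prompt characters via ${provider}\n`,
    });
}

// One registry entry per provider; adding a provider means adding one line here.
const providers: Record<ProviderName, ProviderFactory> = {
    openai: stubProvider('openai'),
    anthropic: stubProvider('anthropic'),
    bedrock: stubProvider('bedrock'),
    ollama: stubProvider('ollama'),
};

// The single call site: nothing here depends on which provider backs the model.
async function generateCommitMessage(model: TextModel, diff: string): Promise<string> {
    const prompt = `Write a concise commit message for this diff:\n${diff}`;
    const reply = await model.generate(prompt);
    return reply.trim();
}
```

With this shape, switching providers is a one-line change at the point where the model is created, for example `providers.ollama('llama3')` instead of `providers.openai('gpt-4o-mini')` (both model names are just examples).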

To validate that the Vercel AI SDK migration was worth it, I added AWS Bedrock support. Even with Cursor, I expected this to take 2-3 hours of iterating, since I didn’t know much about the AWS Bedrock SDK going in.

Cursor’s planning mode was a welcome addition to my workflow, especially for this task. Instead of doing all that iteration during implementation, the planning feature helped me work through it upfront. Combined with the AI SDK’s standardized interface, what could have been a few hours of trial and error became a more focused implementation.

Bedrock support wasn’t on my initial roadmap for v1.0.0, but because the foundation was right and the planning mode helped smooth the process, I could say “why not?” and ship it.

Here’s where things got a bit messy during development. While migrating to Vercel AI SDK v5, Ollama support was temporarily dropped. The SDK didn’t have native Ollama support at the time, and I made the difficult call to proceed with the migration rather than delay the entire v1.0.0 release.

Then something wonderful happened before v1.0.0 shipped: I discovered the community Ollama provider package that brings Ollama into the Vercel AI SDK ecosystem. Problem solved, and Ollama support was restored before release.

This was a genuine relief because respecting users’ existing configurations matters. This entire project started because I was frustrated with tools that didn’t let me configure what I needed. Developers who chose Ollama often did so for good reasons—privacy, cost control, working offline, or just preferring to keep their tooling local.

So here’s the good news: v1.0.0 ships with full Ollama support. Your existing configs keep working. No migration needed.

Version numbers are somewhat arbitrary, but hitting v1.0.0 feels significant—and not just technically.

This project started in the most mundane way possible: sitting in the Munich airport with a Dell laptop I had to borrow for a US trip because of company policy. I was trying out Cursor during its trial phase, working on this fork, and honestly just experimenting to see what AI-assisted coding could do.

I didn’t expect to fall in love with it.

But that’s exactly what happened. AI-assisted coding gave me something to be genuinely passionate about—a topic I could dive deep into, experiment with, and share with others. And the timing couldn’t have been better. This is, after all, the hottest topic in tech right now, and being able to speak about it with real experience, with a real project backing it up, opened doors I hadn’t anticipated.

At TNG, the consulting firm where I work, this passion translated into visibility. People noticed. The work I was doing on aicommits, the knowledge I was building around AI-assisted development, the willingness to experiment and share what I learned—it all contributed to my recent promotion to Principal Consultant.

And here’s the funny part: I’m now apparently the “top spender” at my company on Cursor tokens. Most of that from side projects like this one.

So when I say v1.0.0 is significant, it’s not just about the code reaching maturity. It’s about what this project enabled: career growth, technical depth, and genuine passion for a space that’s reshaping how we build software.

With the v1.0.0 foundation in place, I’m now working on an agent version of aicommits. The Vercel AI SDK makes the multi-turn interaction an agent needs much more tractable, and the provider abstraction means users will be able to run the agent version with whatever AI service they prefer.

It’s still under development, and I’m being intentional about not rushing it. But having this solid v1.0.0 foundation means I can explore those ideas without constantly worrying about the base layer.

If you’re curious about AI-assisted commit messages, or if you’ve been using aicommits and want to see what’s new, you can install it globally with:

    npm i -g @lucavb/aicommits

The tool now supports OpenAI, Anthropic, AWS Bedrock, and Ollama out of the box. Whether you’re using cloud providers or running everything locally, with default settings or custom base URLs, there’s a configuration that will work for your workflow.
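As a rough illustration of "default settings or custom base URLs", the TypeScript sketch below resolves a user's settings against per-provider defaults. The `Settings` shape, the `DEFAULT_BASE_URLS` table, and `resolveSettings` are assumptions made for this example; the actual aicommits option names and defaults may differ, so check the README before relying on any of them.

```typescript
// Hypothetical settings shape; the real aicommits option names may differ.
type Provider = 'openai' | 'anthropic' | 'bedrock' | 'ollama';

interface Settings {
    provider: Provider;
    model: string;
    baseUrl?: string; // optional override, e.g. a proxy or a self-hosted endpoint
}

// Assumed defaults for illustration. Providers without an entry here
// (such as Bedrock, which uses regional endpoints) get no base URL.
const DEFAULT_BASE_URLS: Partial<Record<Provider, string>> = {
    openai: 'https://api.openai.com/v1',
    ollama: 'http://localhost:11434',
};

// An explicit user-supplied base URL always wins over the provider default.
function resolveSettings(user: Settings): Settings {
    return {
        ...user,
        baseUrl: user.baseUrl ?? DEFAULT_BASE_URLS[user.provider],
    };
}

// A local Ollama setup falls back to the default endpoint...
const localSetup = resolveSettings({ provider: 'ollama', model: 'llama3' });

// ...while a custom base URL (hypothetical host) is passed through unchanged.
const proxiedSetup = resolveSettings({
    provider: 'openai',
    model: 'gpt-4o-mini',
    baseUrl: 'https://llm.internal.example/v1',
});
```

Keeping the override optional means existing configurations that never set a base URL continue to work, while anyone behind a proxy or gateway can redirect traffic without touching the rest of their setup.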

For more details, check out the tool on npm or the GitHub repository.

Here’s to v1.0.0, and to whatever comes next. 🎉

What projects have you built with AI-assisted development? Has working on AI tools changed your approach to software development? If you have thoughts, suggestions, or experiences using aicommits, I’d love to hear your story.
