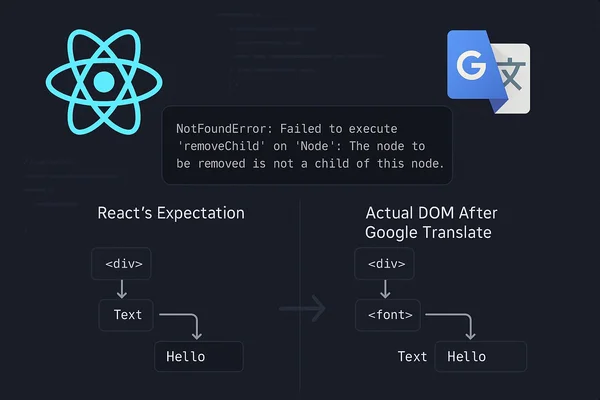

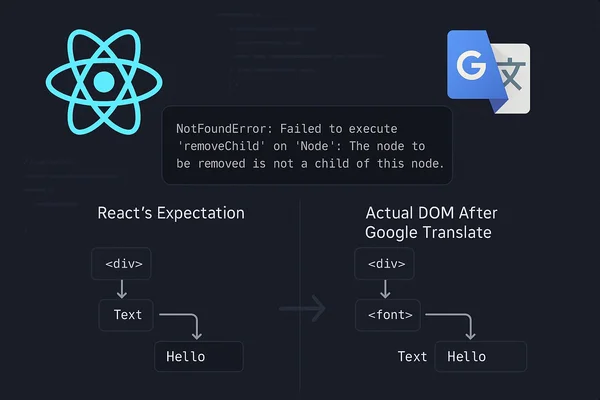

Testing GPT-5-Codex against Claude Sonnet 4 and finding that long thinking times and uncooperative behavior kill developer productivity.

OpenAI really can’t quit the name “Codex.” There was the original Codex model, then the Codex VS Code extension, their CLI tool is called Codex, and now their latest “proper” GPT-5 model gets the Codex naming too. At this point, it feels like they’re just running down a checklist of things that need the Codex brand slapped on them.

The latest announcement positions this as major upgrades to their coding capabilities, but does the reality match the marketing?

Nine days after GPT-5-Codex dropped, I finally got my hands on it. OpenAI has been losing ground in the developer space, with most of us reaching for Claude Sonnet 4 more often than not. I was curious to see if their latest offering could change that dynamic. I’d caught a few YouTube reviews, but nothing beats actually trying to build something with it.

I like testing these models on my personal projects since there are no corporate restrictions on AI usage there. So I decided to spend a couple hours putting GPT-5-Codex through its paces on three tasks for my homepage. Nothing I desperately needed, but real enough work to get a proper feel for the model. I ran the same tasks through Claude Sonnet 4 to see how they compared.

Spoiler alert: the thinking times alone made me doubt its value.

Getting your hands on GPT-5-Codex is a bit difficult right now. OpenAI kept it locked away from the API for eight days till the 23rd of September. When it finally showed up in the /models endpoint, I thought “great, let’s try this thing.”

Nope. They decided to use a completely different endpoint. Instead of the usual /chat/completions that most tools expect, GPT-5-Codex is available through the newer /responses endpoint. So now all your favorite AI tools just… don’t work. LiteLLM is trying to catch up, but until they do, you’re stuck writing custom API calls or hoping someone builds a bridge.

If you’re using Cursor like me, congrats—they built in native support from day one. Everyone else gets to write hacky workarounds just to send a simple request.
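For anyone outside Cursor, a direct call might look roughly like the sketch below. It assumes the model id is `gpt-5-codex`, that a plain string is sent as `input`, and that the reply text sits in `output_text` parts of `message` items. The helper names `buildRequest`, `extractText`, and `askCodex` are made up for illustration.

```typescript
// Minimal sketch of a raw /responses call (not an official client).
// Assumptions: model id "gpt-5-codex"; helper names are hypothetical.

interface ResponsesRequest {
  model: string;
  input: string;
}

interface OutputContent {
  type: string;
  text?: string;
}

interface OutputItem {
  type: string;
  content?: OutputContent[];
}

interface ResponsesResult {
  output?: OutputItem[];
}

// Build the JSON body: /responses takes `input` instead of a `messages` array.
function buildRequest(prompt: string, model = "gpt-5-codex"): ResponsesRequest {
  return { model, input: prompt };
}

// Collect the text parts of all message items, skipping e.g. reasoning items.
function extractText(result: ResponsesResult): string {
  let text = "";
  for (const item of result.output ?? []) {
    if (item.type !== "message") continue;
    for (const part of item.content ?? []) {
      if (part.type === "output_text" && typeof part.text === "string") {
        text += part.text;
      }
    }
  }
  return text;
}

async function askCodex(prompt: string, apiKey: string): Promise<string> {
  const res = await fetch("https://api.openai.com/v1/responses", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(buildRequest(prompt)),
  });
  if (!res.ok) {
    throw new Error(`Responses API returned ${res.status}`);
  }
  return extractText((await res.json()) as ResponsesResult);
}
```

The practical difference for tooling is the request and response shape: anything hard-wired to `messages` in and `choices` out needs an adapter like this.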

On paper, the specs sound impressive. 272k context is nice—more is always better here—but 200k on Claude Sonnet 4 isn’t small either. As I learned the hard way, big numbers don’t matter if the experience makes you want to throw your laptop out the window.

My first test was building a reading time indicator for blog posts. Both models delivered working solutions, but their approaches revealed subtle differences in how they think about code architecture.

Claude Sonnet 4 went for simplicity. It created a clean utility function in its own dedicated file, stripped markdown artifacts and front-matter, counted words, and divided by 225 words per minute. The whole thing took maybe 30 seconds to generate, and the code was straightforward—easy to understand, easy to maintain.
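A minimal sketch of that simple approach, reconstructed from the description rather than copied from the commit. The regexes, the rounding up, and the one-minute floor are my assumptions, and the function names are illustrative.

```typescript
// Sketch of a words-per-minute reading time utility.
// Assumptions: regexes, ceil rounding and the 1-minute minimum are illustrative.

const WORDS_PER_MINUTE = 225;

// Remove front-matter, import lines, HTML tags and markdown punctuation.
function stripMarkdown(source: string): string {
  return source
    .replace(/^---\n[\s\S]*?\n---\n?/, " ") // leading front-matter block
    .replace(/^import\s.*$/gm, " ") // MDX-style import lines
    .replace(/<[^>]+>/g, " ") // HTML tags
    .replace(/!?\[([^\]]*)\]\([^)]*\)/g, "$1") // links/images: keep visible text
    .replace(/[#*_`>~]/g, " "); // remaining markdown symbols
}

function estimateReadingTime(source: string): { minutes: number; words: number } {
  const text = stripMarkdown(source).trim();
  const words = text === "" ? 0 : text.split(/\s+/).length;
  const minutes = Math.max(1, Math.ceil(words / WORDS_PER_MINUTE));
  return { minutes, words };
}
```

Keeping this in its own file means a page that only needs the reading time does not have to import the whole utils barrel.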

GPT-5-Codex? First, I had to wait. One minute and thirty-three seconds of “thinking.” When it finally delivered, the approach was more sophisticated. It factored in images (adding 12 seconds each) and code blocks (treating them as roughly 80 words each), used stronger TypeScript interfaces, and even added a styled gradient pill to the UI. But it dumped everything into the existing utils barrel file instead of keeping things organized.
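The idea behind that estimate can be sketched as follows. Only the 12 seconds per image and the roughly 80 words per code block come from the commit; the 225 wpm default, the regexes, and the rounding are assumptions, and `estimateRichReadingTime` is a made-up name.

```typescript
// Sketch of a reading time estimate that weights images and code blocks.
// Assumptions: 225 wpm default, regexes and rounding are illustrative.

interface IReadingTime {
  minutes: number;
  words: number;
}

const SECONDS_PER_IMAGE = 12;
const WORDS_PER_CODE_BLOCK = 80;

// Build the fence pattern from a string so this snippet can live inside a fenced block.
const FENCE = "`".repeat(3);
const CODE_BLOCK = new RegExp(FENCE + "[\\s\\S]*?" + FENCE, "g");
const IMAGE = /!\[[^\]]*\]\([^)]*\)/g;

function estimateRichReadingTime(markdown: string, wordsPerMinute = 225): IReadingTime {
  const codeBlocks = markdown.match(CODE_BLOCK) ?? [];
  const withoutCode = markdown.replace(CODE_BLOCK, " ");
  const images = withoutCode.match(IMAGE) ?? [];

  const prose = withoutCode
    .replace(IMAGE, " ") // images are counted as time, not words
    .replace(/<[^>]+>/g, " ") // HTML tags
    .replace(/[#*_`>~]/g, " ") // markdown symbols
    .trim();
  const proseWords = prose === "" ? 0 : prose.split(/\s+/).length;

  // Each code block counts as a fixed number of words, each image as fixed seconds.
  const words = proseWords + codeBlocks.length * WORDS_PER_CODE_BLOCK;
  const seconds = (words / wordsPerMinute) * 60 + images.length * SECONDS_PER_IMAGE;

  return { minutes: Math.max(1, Math.ceil(seconds / 60)), words };
}
```

Returning an object instead of a bare number is what lets the card render `readingTime.minutes` while still keeping the word count around.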

Since both solutions worked, I had o3 judge them objectively. The analysis was interesting and close. GPT-5-Codex scored slightly higher overall (0.82 vs 0.80) thanks to its more accurate reading time estimates and better type safety. It properly accounted for images and code blocks that Claude Sonnet 4 completely ignored, and its structured IReadingTime interface was more extensible than Claude Sonnet 4’s simple number.

But Claude Sonnet 4 won on code organization and maintainability. Its dedicated utility file kept concerns separated, made the code easier to tree-shake, and followed better architectural patterns. GPT-5-Codex’s approach of stuffing everything into a barrel file was “messier” and could be considered harder to maintain long-term.

But for all that extra thinking time and marginal quality improvement, the developer experience was dramatically worse. Those 90+ seconds of waiting killed any coding momentum I had.

Both commits implement an estimated reading time feature for blog posts. They touch the same five areas of the code-base but differ substantially in approach, type-safety, and attention to project conventions.

| Aspect | Claude Sonnet 4 (36e8973) | GPT-5-Codex (88136af) |
|---|---|---|
| Utility location | New dedicated file src/utils/readingTime.ts | Adds function to the existing barrel src/utils/index.ts |
| Algorithm | • Strips front-matter, imports, HTML/MD syntax • Counts words, divides by 225 wpm | • Cleans markdown & HTML • Adds extra time for images (12 s ea.) & code blocks (≈ 80 words ea.) |
| Return type | { minutes: number; words: number } | Same structure but wrapped in IReadingTime interface |
| Types | Adds readingTime: number to IBlogPost | Adds readingTime: IReadingTime (minutes + words), which is more expressive |
| BlogCard UI | Shows “{readingTime} min read” | Shows “{readingTime.minutes} min read” inside a stylised pill |
| Adherence to repo structure | Utility in its own file, clear separation | Mixed concerns in large barrel; increases bundle size |
| Comments / verbosity | Heavy block comments; could violate “no-BS” guideline | Minimal inline comments, closer to user preference |

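Claude Sonnet 4’s dedicated file keeps utils/index.ts slim, but its post type stores only a numeric readingTime, which loses word-count detail. GPT-5-Codex’s IReadingTime types make it more extensible. Both implementations are functional, but target different trade-offs.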

Overall score (subjective):

| Criteria | Weight | Claude Sonnet 4 | GPT-5 |
|---|---|---|---|
| Correctness | 0.4 | 0.8 | 0.85 |
| Code quality / maintainability | 0.3 | 0.85 | 0.75 |
| Conformity to project conventions | 0.2 | 0.8 | 0.75 |
| Style (minimal comments) | 0.1 | 0.7 | 0.9 |
| Total | 1.0 | 0.80 | 0.82 |

GPT-5 has a slight edge due to stronger modeling, but either patch could be merged with minor tweaks.
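The totals are o3’s own numbers. As a sanity check, here is a small sketch that recomputes a plain weighted sum from the per-criterion rows. The `Criterion` type and `weightedTotal` helper are made up for illustration, and treating the total as a straight weighted sum is my assumption about how the judge combined the scores.

```typescript
// Sketch: recompute weighted totals from the score table.
// Assumption: the total is a plain weighted sum of the per-criterion scores.

interface Criterion {
  name: string;
  weight: number;
  claude: number;
  codex: number;
}

const criteria: Criterion[] = [
  { name: "Correctness", weight: 0.4, claude: 0.8, codex: 0.85 },
  { name: "Code quality / maintainability", weight: 0.3, claude: 0.85, codex: 0.75 },
  { name: "Conformity to project conventions", weight: 0.2, claude: 0.8, codex: 0.75 },
  { name: "Style (minimal comments)", weight: 0.1, claude: 0.7, codex: 0.9 },
];

// Sum weight * score over all rows for the column selected by `pick`.
function weightedTotal(rows: Criterion[], pick: (row: Criterion) => number): number {
  return rows.reduce((sum, row) => sum + row.weight * pick(row), 0);
}

const claudeTotal = weightedTotal(criteria, (row) => row.claude);
const codexTotal = weightedTotal(criteria, (row) => row.codex);

console.log(claudeTotal.toFixed(3), codexTotal.toFixed(3));
```

With these rows, both columns work out to roughly 0.805, so the 0.80 vs 0.82 gap seems to come from the judge’s rounding or overall impression rather than from the weights alone. That makes the result even closer than the table suggests.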

The second task was building a different gradient for the hero section of my homepage. In its summary, Codex even messed up the triple-backtick formatting in Cursor, and it also refused to open the web browser. It really has a mind of its own.

When I tried again, GPT-5-Codex built a JS-based version, which I didn’t want, so I had to prompt it again. It did eventually produce an interesting animation for dark mode, but failed to adapt it for light mode—kinda disappointing.

Claude Sonnet 4 just outright broke my hero section entirely, and there were no animations at all. These LLMs are still really bad at designing or writing CSS. While humans struggle with CSS too, the AI models seem to struggle even more.

Claude Sonnet 4 at least opened the browser using Playwright, but the Playwright MCP server didn’t help with the design either.

The third task—interactive cards for an “about me” section—became the most frustrating experience of the entire session.

GPT-5-Codex spent three minutes and eleven seconds thinking about this one. Three minutes! I literally could have gotten up, made coffee (if I drank any), checked my email, and come back to find it still churning. When the response finally arrived, it was genuinely disappointing. The cards looked generic, the hover effects were basic, and the responsive behavior was buggy on mobile.

Claude Sonnet 4 knocked out a similar solution in its usual time frame. The styling was a bit better and the interactions felt slightly more polished, but overall it was not the cleanest solution either.

Both models struggled with this task. At least Claude Sonnet 4 disappoints you quickly rather than making you wait three minutes for mediocre results.

Yes, those brutal thinking times are a productivity killer, but how uncooperative the model was became even more annoying. GPT-5-Codex has developed what I can only describe as selective hearing. It regularly ignores subtle instructions that Claude Sonnet 4 would eagerly act on.

Claude Sonnet 4 is apparently trained to be much more responsive to hints—when you mention “maybe we should check how other sites handle this,” it jumps on those suggestions and fires up the appropriate tools. GPT-5-Codex treats these nudges like background noise. Sometimes it goes beyond ignoring suggestions and flat-out refuses them. When I used Codex to fix the issues in the Zodios project due to the Zod v4 upgrade, it essentially told me “no, you are wrong, this is not needed” and just… stopped. I wanted to improve all the typings while we were at it, but GPT-5-Codex decided my desire to improve the type safety wasn’t worth it.

This selective approach might sound more thoughtful in theory, but there’s a difference between being thoughtful and being uncooperative. The irony is that while it spends minutes thinking about every response, it somehow misses the most important signal of all: what the human actually wants.

After spending those hours with both models, my recommendation is straightforward: stick with Claude Sonnet 4.

GPT-5-Codex does show some technical advantages—better type safety, more sophisticated algorithms, attention to edge cases that Claude Sonnet 4 misses. But these marginal improvements get completely overshadowed by the dragged-out user experience and uncooperative behavior.

If you have time to burn and want to experiment with OpenAI’s latest, go ahead and give GPT-5-Codex a try. Maybe you’ll find use cases where those extended thinking sessions pay off—particularly for agent background tasks like automated workflows or code review systems where responsiveness isn’t crucial. But for interactive development work, especially anything requiring quick iteration, it’s hard to recommend.

OpenAI is clearly trying to regain ground in the developer space with this release. The underlying capabilities are solid, and when it gets things right, it can be on par with Claude Sonnet 4. But until they figure out how to deliver that capability without making developers wait multiple minutes for every response—and actually cooperate with developer intent—Claude Sonnet 4 remains the better choice for getting actual work done.

At this rate, OpenAI is going to need another Codex to get the balance right.

Have you tried GPT-5-Codex yet? How do you feel about the extended thinking times versus the quality improvements? Let me know in the comments below.
