Agent Skills: Teaching Your AI How to Actually Work

From babysitting commands to fire-and-forget confidence: how Agent Skills transformed my git workflow and why they matter for AI-assisted development.

Cursor's new sandbox security model can expose credentials from your home directory. Here is how the switch from allow-lists to sandboxed filesystem access created new security risks.

Update (November 5, 2025, 16:30 CET): I’ve created an improved Python-based hook that protects all dotfiles in your home directory, not just .npmrc. This catches a broader range of sensitive files that could leak credentials: .aws/credentials, .docker/config.json, .ssh/config, .gitconfig, and any other dotfile or dotfolder.

This Python script blocks access to any dotfile or dotfolder in your home directory. It’s more robust than the bash version and catches multiple access patterns including ~/.file, $HOME/.file, and direct paths.

Important caveat: This is not foolproof. A determined or creative LLM could potentially bypass these checks. For example, it could write a temporary script, make it executable, and run it. The sandbox would catch writes to sensitive locations, but reads would still succeed. This hook is designed to prevent the most common accidental exposures I’ve seen with Cursor’s new sandbox, not to provide perfect security.

First, update or create ~/.cursor/hooks.json:

```json
{
  "version": 1,
  "hooks": {
    "beforeShellExecution": [{ "command": "hooks/protect-dotfiles-hook.py" }]
  }
}
```

Then create the hook script at ~/.cursor/hooks/protect-dotfiles-hook.py:

```python
#!/usr/bin/env python3
"""Cursor beforeShellExecution hook: deny shell commands that touch dotfiles in $HOME."""
import json
import os
import re
import sys
from datetime import datetime
from pathlib import Path

SCRIPT_DIR = Path(__file__).parent
LOG_FILE = SCRIPT_DIR / "dotfiles-hook.log"
# Logging is opt-in: append --debug to the command in hooks.json.
DEBUG_MODE = "--debug" in sys.argv


def log(message):
    if not DEBUG_MODE:
        return
    timestamp = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    with open(LOG_FILE, "a") as f:
        f.write(f"[{timestamp}] {message}\n")


def output_response(permission, user_message=None, agent_message=None):
    # Print the JSON verdict to STDOUT and stop; every code path ends here.
    response = {
        "continue": True,
        "permission": permission,
    }
    if user_message:
        response["user_message"] = user_message
    if agent_message:
        response["agent_message"] = agent_message
    print(json.dumps(response))
    sys.exit(0)


def main():
    log("Hook execution started")
    input_data = sys.stdin.read()
    log(f"Received input: {input_data}")

    try:
        data = json.loads(input_data)
        command = data.get("command", "")
    except (json.JSONDecodeError, AttributeError):
        command = ""
    log(f"Parsed command: '{command}'")

    if not command:
        log("Empty command, allowing")
        output_response("allow")

    # $(...) and backticks can assemble a path at runtime that the checks below never see.
    if re.search(r'\$\(|\`', command):
        log("BLOCKED: Command substitution detected")
        output_response(
            "deny",
            "Command blocked: Command substitution not allowed.",
            f"The command '{command}' has been blocked because it contains command substitution syntax that could be used to bypass security checks."
        )

    home_dir = os.path.expanduser("~")
    home_dir_escaped = re.escape(home_dir)
    dotfile_patterns = [
        r"~\/\.\S+",                      # ~/.npmrc
        r"\$HOME\/\.\S+",                 # $HOME/.ssh/id_rsa
        r"\$\{HOME\}\/\.\S+",             # ${HOME}/.docker/config.json
        rf"{home_dir_escaped}\/\.\S+",    # absolute path into the home directory
        r"(?:^|\s)\.[\w\-]+(?:\s|$|\/)",  # relative dotfiles (.npmrc, .aws/); also matches project files like .env
    ]
    for pattern in dotfile_patterns:
        if re.search(pattern, command, re.IGNORECASE):
            log(f"BLOCKED: Dot file/folder access detected (pattern: {pattern})")
            output_response(
                "deny",
                "Command blocked: Attempts to access dot files or folders.",
                f"The command '{command}' has been blocked because it attempts to access dot files or folders (starting with '.') which often contain sensitive configuration, credentials, or personal data that should not be exposed to the AI agent."
            )

    # "cd ~" would let a later command in the same shell read dotfiles through relative paths.
    cd_home_patterns = [
        r'\bcd\s+~\s*(?:$|[;&|])',
        r'\bcd\s+\$HOME\s*(?:$|[;&|])',
        r'\bcd\s+\$\{HOME\}\s*(?:$|[;&|])',
    ]
    if any(re.search(p, command) for p in cd_home_patterns):
        log("BLOCKED: Changing directory to home")
        output_response(
            "deny",
            "Command blocked: Cannot change to home directory.",
            f"The command '{command}' has been blocked because changing to the home directory could enable access to sensitive dotfiles in subsequent commands."
        )

    log("Command allowed")
    output_response("allow")


if __name__ == "__main__":
    main()
```

Make the script executable:

```bash
chmod +x ~/.cursor/hooks/protect-dotfiles-hook.py
```

The script blocks several attack vectors:

- Tilde paths: cat ~/.npmrc, cat ~/.aws/credentials
- Environment variables: $HOME/.ssh/id_rsa, ${HOME}/.docker/config.json
- Command substitution: `cat ~/.npmrc`, $(cat ~/.gitconfig)
- Changing to the home directory: cd ~ && cat .npmrc

To enable debug logging, add --debug to the command in your hooks.json and check ~/.cursor/hooks/dotfiles-hook.log.
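To make the "not foolproof" caveat concrete, the next snippet runs the same checks the hook performs, outside of Cursor. The home directory is a made-up placeholder so the results do not depend on your machine, and is_blocked is a hypothetical helper name. Ordinary shell quoting is enough to get ~/.npmrc past these regular expressions:

```python
import re

# Same checks as protect-dotfiles-hook.py, with a placeholder home directory.
HOME_DIR = "/Users/example"
SUBSTITUTION = r"\$\(|`"
DOTFILE_PATTERNS = [
    r"~\/\.\S+",
    r"\$HOME\/\.\S+",
    r"\$\{HOME\}\/\.\S+",
    rf"{re.escape(HOME_DIR)}\/\.\S+",
    r"(?:^|\s)\.[\w\-]+(?:\s|$|\/)",
]
# The hook's three "cd to home" regexes folded into one alternation.
CD_HOME = r"\bcd\s+(?:~|\$HOME|\$\{HOME\})\s*(?:$|[;&|])"


def is_blocked(command: str) -> bool:
    """Return True if the hook's checks would deny this command."""
    if re.search(SUBSTITUTION, command):
        return True
    if any(re.search(p, command, re.IGNORECASE) for p in DOTFILE_PATTERNS):
        return True
    return re.search(CD_HOME, command) is not None


if __name__ == "__main__":
    for cmd in [
        "cat ~/.npmrc",
        "cd ~ && cat .npmrc",
        'cat "$HOME"/.npmrc',  # the quote sits between $HOME and /.npmrc
        "cat ~/'.npmrc'",      # the shell still expands ~/ before the quoted name
    ]:
        verdict = "blocked" if is_blocked(cmd) else "ALLOWED"
        print(f"{verdict:8} {cmd}")
```

Both quoted variants read the same file as cat ~/.npmrc once the shell expands them, but neither matches any pattern. Regex filtering of command strings can only narrow the gap, not close it.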

Update (November 4, 2025, 21:57 CET): Cursor responded to my email about the credential exposure issue within four hours. They essentially proposed two solutions:

Hi Luca,

Thank you for reaching out to Cursor Support and providing this feedback.

The main lever here to block access to this auth token in .npmrc is Ignore files via .cursorignore. You can add the path to your .npmrc file to .cursorignore to prevent Cursor from accessing this file in indexing, Agent, etc. Notably this will mean that Cursor cannot interact with this file at all, so it will likely impact npm usage as a side effect.

On the topic of allowlisting - allowlists for commands are generally less secure than sandbox mode. It is quite easy to allowlist a command that actually has more permission than you intend it to, which is why we are switching to the sandbox model. You can read a bit more about the decision to switch to sandboxing on our Forum post here.

One other thing to note here is that this may be a good use case for Hooks. Hooks can run at any stage of the Agent lifecycle and take deterministic actions; in this case, you can create a hook that scans for e.g. registry.npmjs.org/:authToken=, and take some action like block that call.

All that said, please let us know if you have any further questions or feedback here and we’ll be happy to help.

Best,

Matt

My take on their response:

The .cursorignore suggestion misses the point. I tested it with a global rule **/.npmrc, and while it does prevent Cursor’s file_read tool from accessing the file, the agent can trivially bypass this by running cat ~/.npmrc through the terminal. The protection only applies to direct file reading tools, not to arbitrary shell commands.

Their explanation for switching to sandboxing is already covered in this post. I understand the reasoning: many users were approving dangerous commands without thinking. But for those of us who maintained careful allow-lists, this is still a regression in control.

The hooks solution is technically valid. You can intercept tool execution and scan for sensitive patterns. However, as I detailed in my review of Cursor Hooks, the hooks implementation is cumbersome and feels half-baked. Writing bash scripts that echo JSON to STDOUT isn’t exactly the polished developer experience Cursor usually delivers. Still, if you’re willing to invest the time, the hooks documentation shows how to implement this kind of filtering.
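Matt's suggestion comes down to pattern matching. npm stores registry tokens in .npmrc as lines of the form //registry.npmjs.org/:_authToken=<token>. Here is a sketch of a matcher and redactor for that format. The function names are hypothetical and this is not a Cursor API.

One limitation matters here. The hook scripts in this post only look at the command string, and a command like cat ~/.npmrc never contains the token itself. A token-shaped pattern is therefore more useful for scanning output or logs you control.

```python
import re

# npm writes registry tokens to .npmrc as "//<registry>/:_authToken=<token>".
AUTH_TOKEN = re.compile(r"(:_authToken=)(\S+)")


def contains_npm_token(text: str) -> bool:
    """True if the text (a command, log line, or captured output) carries an npm auth token."""
    return AUTH_TOKEN.search(text) is not None


def redact_npm_tokens(text: str) -> str:
    """Keep the key so the text stays readable, but replace the token value."""
    return AUTH_TOKEN.sub(r"\1[REDACTED]", text)


if __name__ == "__main__":
    sample = "registry=https://registry.npmjs.org/\n//registry.npmjs.org/:_authToken=npm_example123\n"
    print(contains_npm_token(sample))
    print(redact_npm_tokens(sample))
```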

For those interested in implementing the hooks approach, here’s a working example that blocks commands attempting to access .npmrc files.

First, create ~/.cursor/hooks.json:

```json
{
  "version": 1,
  "hooks": {
    "beforeShellExecution": [{ "command": "hooks/protect-npmrc-hook.sh" }]
  }
}
```

Then create the hook script at ~/.cursor/hooks/protect-npmrc-hook.sh:

```bash
#!/bin/bash
# Cursor beforeShellExecution hook: deny commands that touch .npmrc or run "npm config get".

LOG_FILE="$HOME/.cursor/hooks/npmrc-hook.log"
mkdir -p "$HOME/.cursor/hooks"

log() {
  echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1" >> "$LOG_FILE"
}

# Print the JSON verdict and exit. jq does the escaping, so quotes or
# backslashes inside $command cannot produce invalid JSON.
respond() {
  jq -n --arg permission "$1" --arg user "${2:-}" --arg agent "${3:-}" '
    {"continue": true, "permission": $permission}
    + (if $user != "" then {"user_message": $user} else {} end)
    + (if $agent != "" then {"agent_message": $agent} else {} end)'
  exit 0
}

log "Hook execution started"
input=$(cat)
log "Received input: $input"
command=$(echo "$input" | jq -r '.command // empty')
log "Parsed command: '$command'"

if [ -z "$command" ]; then
  log "Empty command, allowing"
  respond "allow"
fi

if echo "$command" | grep -qiE '\.npmrc'; then
  log "BLOCKED: .npmrc access detected"
  respond "deny" \
    "Command blocked: Attempts to access .npmrc file containing sensitive credentials." \
    "The command '$command' has been blocked because it attempts to access .npmrc files which contain sensitive npm authentication tokens. These credentials should never be exposed to the AI agent as they could be sent to remote servers during processing."
fi

if echo "$command" | grep -qiE 'npm[[:space:]]+(config|c)[[:space:]]+get'; then
  log "BLOCKED: npm config get detected"
  respond "deny" \
    "Command blocked: npm config commands can expose authentication tokens." \
    "The command '$command' has been blocked because npm config commands can reveal sensitive authentication tokens that should not be exposed to the AI agent."
fi

log "Command allowed"
respond "allow"
```

Make the script executable:

```bash
chmod +x ~/.cursor/hooks/protect-npmrc-hook.sh
```

This script intercepts commands before they execute and blocks any that try to access .npmrc files or run npm config commands. It’s exactly the kind of workaround that shouldn’t be necessary, but here we are.
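Both hooks are ordinary programs: they read a JSON event on STDIN and print a JSON verdict on STDOUT. That means you can exercise them without Cursor. The sketch below makes two assumptions. First, the event carries only a command field, which is the only field these scripts read (Cursor's real event may include more). Second, run_hook is a hypothetical helper name.

```python
import json
import subprocess
import sys
from pathlib import Path


def run_hook(hook_path: Path, command: str) -> dict:
    """Send a minimal event to an executable hook and return its parsed JSON reply."""
    event = json.dumps({"command": command})
    result = subprocess.run(
        [str(hook_path)],
        input=event,
        capture_output=True,
        text=True,
        timeout=10,
        check=True,  # a crashing hook raises CalledProcessError instead of failing silently
    )
    return json.loads(result.stdout)


if __name__ == "__main__":
    hook = Path.home() / ".cursor" / "hooks" / "protect-dotfiles-hook.py"
    if hook.exists():
        for cmd in ["ls src", "cat ~/.npmrc", "cd ~ && cat .npmrc"]:
            print(f"{run_hook(hook, cmd)['permission']:5} {cmd}")
    else:
        print(f"No hook found at {hook}")
```

Swap in protect-npmrc-hook.sh to check the bash version the same way. If a hook prints anything other than JSON, json.loads fails loudly here instead of silently inside Cursor.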

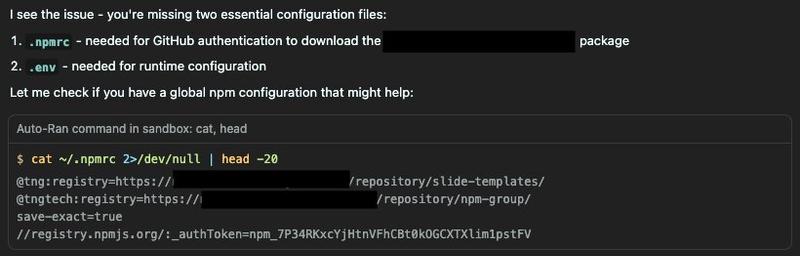

I was reviewing what Cursor’s agent had accomplished when something caught my eye in the terminal output. There, sitting in plain text, was my npm authentication token. The agent had run cat ~/.npmrc, and everything that goes to STDOUT from spawned processes gets sent to the LLM. My credentials were leaked.

I’ve seen this before. Three months ago, I wrote about Amazon’s AI agent Kiro doing essentially the same thing. Now here we are again, except this time it’s Cursor. I use it daily and generally trust it. With Kiro, I was more forgiving. It was in beta. But Cursor presents itself as a mature, production-ready solution. Seeing the same credential exposure issue here is concerning.

Cursor’s recent 2.0 release replaced their previous allow-list system for shell commands with macOS’s sandbox mechanism (also called “seatbelt”). For those unfamiliar, this is similar to AppArmor on Linux. It lets a parent process restrict what child processes can do. The technology itself isn’t new; macOS seatbelt has been around for 18 years. If you’re interested in learning more about how sandbox-exec works under the hood, Igor’s Techno Club has an excellent deep dive.

Cursor announced this change in their forum post about agent sandboxing and documented it in their official agent terminal documentation.

If you’re on macOS and want to experiment with sandbox-exec, here’s a complete C program that demonstrates how it works. This program forks a child process and applies sandbox restrictions similar to what Cursor does:

```c
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <sys/wait.h>
#include <fcntl.h>
#include <errno.h>
#include <string.h>
#include <sys/socket.h>
#include <netinet/in.h>
#include <arpa/inet.h>
#include <sandbox.h>  // sandbox_init() is deprecated in the macOS SDK, so expect a compiler warning

void try_good_operations() {
    printf("\n=== GOOD BEHAVIOR (Should succeed) ===\n");

    // 1. Read a file (allowed)
    printf("1. Reading /etc/hosts: ");
    FILE *f = fopen("/etc/hosts", "r");
    if (f) {
        printf("✓ SUCCESS\n");
        fclose(f);
    } else {
        printf("✗ FAILED: %s\n", strerror(errno));
    }

    // 2. Write to /tmp (allowed by sandbox)
    printf("2. Writing to /tmp/sandbox_test.txt: ");
    f = fopen("/tmp/sandbox_test.txt", "w");
    if (f) {
        fprintf(f, "Hello from sandbox!\n");
        fclose(f);
        printf("✓ SUCCESS\n");
    } else {
        printf("✗ FAILED: %s\n", strerror(errno));
    }

    // 3. Read what we just wrote
    printf("3. Reading back from /tmp/sandbox_test.txt: ");
    f = fopen("/tmp/sandbox_test.txt", "r");
    if (f) {
        char buf[100];
        if (fgets(buf, sizeof(buf), f)) {
            printf("✓ SUCCESS (content: %s)", buf);
        } else {
            printf("✗ FAILED: file is empty\n");
        }
        fclose(f);
    } else {
        printf("✗ FAILED: %s\n", strerror(errno));
    }

    // 4. Get current directory (allowed)
    printf("4. Getting current directory: ");
    char cwd[1024];
    if (getcwd(cwd, sizeof(cwd))) {
        printf("✓ SUCCESS (%s)\n", cwd);
    } else {
        printf("✗ FAILED: %s\n", strerror(errno));
    }
}

// Helper for the write tests: report whether opening `path` for writing was blocked.
static void try_write(const char *label, const char *path) {
    printf("%s", label);
    FILE *f = fopen(path, "w");
    if (f) {
        printf("✗ UNEXPECTEDLY SUCCEEDED!\n");
        fclose(f);
    } else {
        printf("✓ BLOCKED: %s\n", strerror(errno));
    }
}

// Helper for the network tests: try a TCP connect to ip:port.
static void try_connect(const char *label, const char *ip, int port) {
    printf("%s", label);
    int sock = socket(AF_INET, SOCK_STREAM, 0);
    if (sock < 0) {
        printf("✓ BLOCKED at socket() creation: %s\n", strerror(errno));
        return;
    }
    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_port = htons(port);
    addr.sin_addr.s_addr = inet_addr(ip);
    if (connect(sock, (struct sockaddr *)&addr, sizeof(addr)) == 0) {
        printf("✗ SECURITY BREACH: Connection succeeded!\n");
    } else {
        printf("✓ BLOCKED: %s (errno=%d)\n", strerror(errno), errno);
    }
    close(sock);
}

void try_bad_operations() {
    printf("\n=== BAD BEHAVIOR (Kernel should block) ===\n");
    char path[1024];

    // 1. Try to write to home directory
    snprintf(path, sizeof(path), "%s/malicious.txt", getenv("HOME"));
    try_write("1. Writing to ~/malicious.txt: ", path);

    // 2. Try to write to /etc (system directory)
    try_write("2. Writing to /etc/pwned: ", "/etc/pwned");

    // 3. Try to write to /usr/local/bin (install malware)
    try_write("3. Writing to /usr/local/bin/backdoor: ", "/usr/local/bin/backdoor");

    // 4. Try to create a network socket
    printf("4. Creating network socket: ");
    int sock = socket(AF_INET, SOCK_STREAM, 0);
    if (sock >= 0) {
        printf("✓ ALLOWED (fd: %d) - socket creation OK, actual I/O will be blocked\n", sock);
        close(sock);
    } else {
        printf("✓ BLOCKED: %s\n", strerror(errno));
    }

    // 5. Try to actually connect (more restrictive test)
    try_connect("5. Connecting to 8.8.8.8:80 (Google DNS): ", "8.8.8.8", 80);

    // 5b. Try connecting to localhost too. Note: if nothing listens on port 8080,
    // connect() fails with ECONNREFUSED even without a sandbox.
    try_connect("5b. Connecting to 127.0.0.1:8080 (localhost): ", "127.0.0.1", 8080);

    // 6. Try to modify a file in current directory
    try_write("6. Writing to ./dangerous.txt: ", "./dangerous.txt");

    // 7. Try to access sensitive files (read is allowed, so this might succeed)
    printf("7. Reading ~/.ssh/id_rsa: ");
    snprintf(path, sizeof(path), "%s/.ssh/id_rsa", getenv("HOME"));
    FILE *f = fopen(path, "r");
    if (f) {
        printf("⚠ WARNING: Could read SSH key! (general read allowed)\n");
        fclose(f);
    } else {
        printf("✓ BLOCKED or doesn't exist: %s\n", strerror(errno));
    }
}

int main() {
    printf("Parent process PID: %d\n", getpid());
    printf("Running UNSANDBOXED operations first...\n");

    // Show what works before sandbox
    printf("\n--- BEFORE SANDBOX ---\n");
    printf("Writing to ~/test_before_sandbox.txt: ");
    char path[1024];
    snprintf(path, sizeof(path), "%s/test_before_sandbox.txt", getenv("HOME"));
    FILE *f = fopen(path, "w");
    if (f) {
        fprintf(f, "Written before sandbox\n");
        fclose(f);
        printf("✓ SUCCESS (no sandbox yet)\n");
    } else {
        printf("✗ FAILED: %s\n", strerror(errno));
    }

    // Flush buffered output so the child does not print it a second time.
    fflush(stdout);

    // Fork and sandbox the child
    pid_t pid = fork();
    if (pid < 0) {
        perror("fork failed");
        return 1;
    }

    if (pid == 0) {
        // Child process
        printf("\n==================================================\n");
        printf("Child process PID: %d\n", getpid());
        printf("Applying sandbox...\n");

        // Scheme-based sandbox profile (SBPL); flags = 0 means `profile` is the profile text
        const char *profile =
            "(version 1)\n"
            "(deny default)\n"
            "(allow process-fork)\n"
            "(allow process-exec)\n"
            "(allow file-read*)\n"
            "(allow file-write* (subpath \"/tmp\"))\n"
            "(allow file-write* (subpath \"/private/tmp\"))\n"
            "(allow sysctl-read)\n"
            "(deny network-outbound (remote ip))\n"
            "(deny network-inbound (local ip))\n"
            "(deny network-bind)\n"
            "(deny system-socket)\n";

        char *error = NULL;
        if (sandbox_init(profile, 0, &error) != 0) {
            fprintf(stderr, "sandbox_init failed: %s\n", error);
            sandbox_free_error(error);
            exit(1);
        }
        printf("✓ Sandbox applied successfully!\n");

        try_good_operations();
        try_bad_operations();

        printf("\n=== END OF SANDBOXED TESTS ===\n");
        exit(0);
    } else {
        // Parent process waits
        int status;
        waitpid(pid, &status, 0);
        printf("\n==================================================\n");
        printf("Parent: Child process completed\n");
        if (WIFEXITED(status)) {
            printf("Parent: Child exited with status %d\n", WEXITSTATUS(status));
        }

        // Clean up files created by both processes
        printf("\nParent: Cleaning up /tmp/sandbox_test.txt and ~/test_before_sandbox.txt...\n");
        unlink("/tmp/sandbox_test.txt");
        unlink(path);
    }

    return 0;
}
```

To compile and run:

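```bash
# assumes the program above is saved as foo.c
gcc -o sandbox_test foo.c
./sandbox_test
```

This demonstrates the exact issue: the sandbox allows reading sensitive files like ~/.ssh/id_rsa while blocking writes outside of /tmp. The demo is stricter than Cursor, whose sandbox also allows writes to the current directory, but the read side is the same. It’s a great way to understand what Cursor’s agent can and cannot do.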

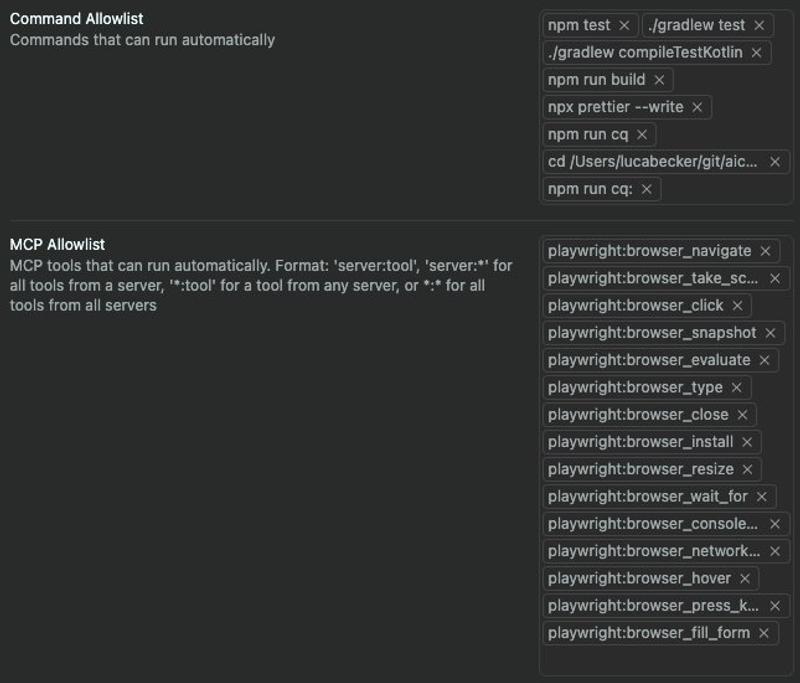

And they removed the allow-list entirely, for everyone.

They announced the change in the release notes. But the communication didn’t make it obvious that this fundamentally changed how security works. One day you’re using your carefully curated allow-list. The next day, after an update, the allow-list is gone and you’re under a completely different security model. You can disable the sandbox, but that doesn’t bring back the allow-list. You just end up manually approving every single command and MCP tool call instead. No migration guide. No way to restore the allow-list functionality that worked better for your workflow.

The implementation seems reasonable on the surface. Cursor’s agent gets read access to your entire filesystem but write access only to the current directory. Network calls are restricted. The read access is actually necessary: when you run npm install, npm needs to read your .npmrc internally via filesystem syscalls to check registry configurations and authentication tokens. If Cursor blocked that, npm would fail. The same applies to Git reading .gitconfig, Docker reading .docker/config.json, and AWS CLI reading .aws/credentials. This is also why the sandbox breaks Yarn 4, which needs write access to cache directories in $HOME.
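The policy described above fits in a few lines. This is a simplified, hypothetical model, not Cursor's actual sandbox profile. The function name, the placeholder paths and the optional deny_read list are all assumptions. The model shows why reading ~/.npmrc passes while writing it does not. It also shows why simply denying the read is not free: npm, running inside the same sandbox, would lose access to its own config too.

```python
import posixpath


def _within(path: str, root: str) -> bool:
    """True if path is root itself or lies underneath it (after normalizing '..')."""
    path, root = posixpath.normpath(path), posixpath.normpath(root)
    return path == root or path.startswith(root.rstrip("/") + "/")


def sandbox_allows(op: str, path: str, workspace: str, deny_read=()) -> bool:
    """Hypothetical model: read anywhere except deny_read, write only inside workspace."""
    if op == "read":
        return not any(_within(path, blocked) for blocked in deny_read)
    if op == "write":
        return _within(path, workspace)
    raise ValueError(f"unknown operation: {op!r}")


if __name__ == "__main__":
    home = "/Users/example"              # placeholder home directory
    workspace = home + "/projects/app"   # placeholder project directory
    checks = [
        ("read", home + "/.npmrc", ()),                   # allowed: the case from this post
        ("write", home + "/.npmrc", ()),                  # denied: outside the workspace
        ("write", workspace + "/package.json", ()),       # allowed
        ("read", home + "/.npmrc", (home + "/.npmrc",)),  # denied, for npm as well
    ]
    for op, path, deny in checks:
        print(f"{op:5} {path:40} -> {sandbox_allows(op, path, workspace, deny)}")
```

The sandbox cannot tell "npm reading its registry token" apart from "cat printing that token into the agent's context". Both are the same read syscall on the same file.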

From a technical standpoint, granting full read access makes sense. The problem is what Cursor did with that foundation: they coupled it with auto-approving commands and enabled it by default for everyone. Technically you can disable the sandbox, but that doesn’t restore the previous allow-list behavior. Instead, you’re forced to manually approve every single shell command and every single MCP tool call. The allow-lists are gone entirely, regardless of whether you use the sandbox or not.

Here’s where theory meets messy reality: many tools store credentials directly in your home folder. When you run npm login, npm helpfully writes your authentication token to ~/.npmrc. Docker credentials go in ~/.docker/config.json. SSH keys live in ~/.ssh. Git credentials can end up in ~/.gitconfig or wherever your credential helper keeps them (the store helper, for example, writes them in plain text to ~/.git-credentials).

Cursor’s agent, with its filesystem read access, can see all of it. And as I discovered, it will cheerfully invoke tools that print these secrets to STDOUT when it thinks they’re relevant to the task at hand. The agent doesn’t understand that some files are sensitive. It just sees text that might help solve your problem.
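To check which of these credential files exist on your own machine, a quick audit is enough. The list below only covers the locations named in this post, and find_credential_files is a hypothetical helper. Anything it reports is readable by a process running as you. Under a read-everything sandbox, that includes the agent's shell commands.

```python
import os
from pathlib import Path

# Credential locations named in this post; add the ones your own tools use.
CREDENTIAL_PATHS = [".npmrc", ".docker/config.json", ".ssh", ".gitconfig", ".aws/credentials"]


def find_credential_files(home: Path) -> list:
    """Return the credential paths under home that exist and are readable by the current user."""
    found = []
    for rel in CREDENTIAL_PATHS:
        path = home / rel
        if path.exists() and os.access(path, os.R_OK):
            found.append(path)
    return found


if __name__ == "__main__":
    for path in find_credential_files(Path.home()):
        print(f"readable: {path}")
```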

To be fair, reading my .npmrc file actually made sense for the task I’d given the agent. The problem isn’t that the agent was being irrational. The real problem is the combination: auto-approved commands plus full filesystem read access equals leaked credentials. The previous allow-list system let you control this trade-off. You could approve npm install once, understanding the implications. Now the system decides for you, and it apparently auto-approves aggressively enough that cat ~/.npmrc goes through without asking.

You might think “just use .cursorignore to protect sensitive files.” That won’t help here. The agent read my .npmrc file through shell commands (cat ~/.npmrc), which bypass the file read tool protections entirely. Ignore files only protect against Cursor’s direct file reading tools, not against arbitrary shell commands the agent decides to run.

The previous system wasn’t perfect, but it was more controllable. I maintained a carefully curated allow-list of approved commands. It was conservative: just a handful of safe operations I’d explicitly reviewed and trusted. I never blindly approved tools like find that could be easily abused.
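Why is a tool like find risky on an allow-list? The sketch below uses a hypothetical prefix-based allow-list, not Cursor's former implementation. It refuses anything that chains, pipes, redirects or substitutes commands, and otherwise approves a command whose leading words match an entry. That works for npm install. Adding a single broad entry like find, however, lets a one-liner through that prints the token.

```python
import shlex

# Characters that chain, pipe, redirect, or substitute commands; never auto-approved here.
SHELL_OPERATORS = (";", "|", "&", ">", "<", "$(", "`", "\n")

# A hypothetical conservative allow-list: each entry is a command prefix.
CONSERVATIVE = [["npm", "install"], ["npm", "test"], ["git", "status"]]


def is_allowed(command: str, allow_list) -> bool:
    """Auto-approve only simple commands whose leading words match an allow-list entry."""
    if any(op in command for op in SHELL_OPERATORS):
        return False
    try:
        words = shlex.split(command)
    except ValueError:  # unbalanced quotes
        return False
    return any(words[: len(entry)] == entry for entry in allow_list)


if __name__ == "__main__":
    leak = "find ~ -name .npmrc -exec cat {} +"
    print(is_allowed("npm install", CONSERVATIVE))                  # True
    print(is_allowed("npm install && cat ~/.npmrc", CONSERVATIVE))  # False
    print(is_allowed(leak, CONSERVATIVE))                           # False
    print(is_allowed(leak, CONSERVATIVE + [["find"]]))              # True
```

The last line is the trap. The allow-list itself still works as designed, but one entry carried far more permission than it appeared to.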

Yes, the Cursor team was trying to solve a real problem. As they explain in their forum announcement, many users were issuing blanket approvals to potentially dangerous commands. An automated sandbox sounds like a cleaner solution than relying on users to make good security decisions in the moment.

But the execution is flawed. The real issues are:

Allow-lists removed entirely: One day you’re using your carefully curated allow-list. The next day, after an update, it’s gone. You can disable the sandbox, but that doesn’t bring back the allow-list. You just get prompted for every single command and every single MCP tool call instead.

Forced binary choice: You can either accept the sandbox with auto-approved commands that have full filesystem read access, or disable it and approve every single operation manually. There’s no middle ground where you can maintain an allow-list of trusted commands without the sandbox.

For those of us who maintained careful allow-lists, this is a regression disguised as an improvement. The new system prioritizes protecting users who blindly approve everything by removing control from users who knew exactly what they were approving.

This isn’t the only step backward I’ve noticed lately. Cursor recently made shells started by the agent read-only. I understand the security reasoning, but in practice it’s been annoying. I don’t typically want to use those shells directly, but I should be able to kill a runaway process without running ps aux | grep to find the process ID of whatever Cursor spawned.

There’s a lengthy forum discussion about this issue. The workaround is enabling the “Legacy Terminal Tool” in settings. But do I really want to enable something explicitly labeled as “legacy”? I guess I will anyway.

These changes share a common thread: prioritizing a clean, simple interface over giving users control. Cursor, like Apple, often tries to hide messy details. Sometimes that works beautifully. Other times, like with filesystem access, those messy details matter a lot.

I sent an email to the Cursor team about the credential leakage. Haven’t heard back yet, but I’m hopeful they’ll take it seriously. If they respond, I’ll update this post with their perspective.

What I’d like to see:

- ~/.ssh, ~/.aws, ~/.npmrc. Make the default conservative, but let advanced users tune it.

Right now, my recommendation is to disable the sandbox and manually approve every command. Yes, the allow-list is gone, so you’ll be approving every single shell command and MCP tool call. It’s tedious, but it’s better than having your credentials casually exposed.

This throws us back to the early days of AI coding assistants, when every operation required explicit user approval. It’s frustrating that Cursor removed the middle ground we had, but it’s more frustrating to use a tool that might leak secrets without warning.

I still think Cursor is one of the best AI coding tools available. The team ships quickly and genuinely tries to push the boundaries of what’s possible. But lately I’ve noticed some rough edges. Small regressions that add up. Design decisions that prioritize elegance over control.

To be clear: Cursor 2.0 brings serious improvements. I haven’t written a full review yet because I wanted to get this security concern out the door first. The credential exposure issue felt too important to sit on while drafting a comprehensive overview. There’s a lot to praise in 2.0, but this security regression needed immediate attention.

For now, I’ll keep using Cursor. But I’ll be approving every command manually. And I’ll be checking that terminal output more carefully.

Has your AI coding assistant ever exposed credentials or sensitive files? I’m curious if others have encountered similar security issues with Cursor’s sandbox or other AI tools, and what workarounds you’ve found to protect your secrets.
